Intro to Digital Signal Processing (DSP)

Est. read time: 7 minutes | Last updated: May 28, 2025 by John Gentile

Overview

Digital Signal Processing (DSP) provides a means for analyzing and constructing signals in the digital domain, whether in digital hardware or in software. Compared with the analog domain, the continual progression of smaller and faster digital hardware allows for signal processing systems that are often cheaper, more reliable and more adaptable than their hard-wired analog counterparts. These practical benefits can come at the cost of precision, since converting between the analog and digital domains introduces distortion. In practice though, intelligent application of DSP concepts can reduce these distortion effects and, in some cases, actually improve and condition the signal for an intended purpose.

Signals

A signal is a pretty general term; it can describe a set of data pertaining to almost any sort of application or industry, from the electrical signal passing internet bits around to stock ticker prices driving world markets. Systems process signals by modifying, passing and/or extracting information from them.

Fundamentally, a signal is any quantity that varies with one or more independent variables, like voltage changing over time. A signal may or may not be mathematically or functionally definable; the natural waveform of recorded music, for example, is not. The rest of this discussion uses time as the independent variable, but it can be replaced with any other. Frequency is inversely related to the period of a signal ($f = 1/T$). Common periodic signals that vary with time ($t$) can be described with three attributes: amplitude ($A$), frequency ($f$) and phase ($\theta$). For example, a basic sinusoid:

$$x(t) = A\cos(2\pi f t + \theta) = A\cos(\omega t + \theta)$$

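Where $\omega$ is the frequency in radians/sec, related to the frequency $f$ in Hz (cycles/sec) by the simple conversion $\omega = 2\pi f$. Note that a periodic signal satisfies the criterion $x(t) = x(t + nT)$, where $T$ is the period and $n$ is any integer. It's also important to describe sinusoids in complex exponential form, also known as a phasor:

$$x(t) = A e^{j(\omega t + \theta)}$$

Which, given the Euler identity $e^{\pm j\phi} = \cos\phi \pm j\sin\phi$, gives:

$$x(t) = A\cos(\omega t + \theta) + jA\sin(\omega t + \theta)$$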
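As a quick numerical check of the phasor form, the sketch below evaluates a complex exponential and compares its real and imaginary parts with the cosine and sine of the same argument. The helper name `phasor` and the values chosen for amplitude, frequency, phase and time are illustrative assumptions, not taken from the text.

```python
import cmath
import math

def phasor(amplitude, omega, theta, t):
    """Return A * e^{j(omega*t + theta)} as a Python complex number."""
    return amplitude * cmath.exp(1j * (omega * t + theta))

# Illustrative values (assumptions): A = 2, f = 5 Hz, theta = pi/4, t = 13 ms
A, f, theta, t = 2.0, 5.0, math.pi / 4, 0.013
omega = 2 * math.pi * f  # convert Hz to radians/sec

z = phasor(A, omega, theta, t)
cos_part = A * math.cos(omega * t + theta)
sin_part = A * math.sin(omega * t + theta)

# Euler identity: real part is the cosine term, imaginary part is the sine term
print(z.real, cos_part)
print(z.imag, sin_part)
```

The magnitude of the phasor stays at the amplitude for every `t`; only its angle rotates with time.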

Since the goal of DSP is to operate on signals within the digital domain, to deal with real/natural, analog signals we must digitize them; the common component that accomplishes this is unsurprisingly called an Analog to Digital Converter (ADC or A/D Converter). Most ADCs operate on electrical signals which come from a transducer or other electrical source. The inverse of an ADC is a Digital to Analog Converter (DAC or D/A Converter) which takes digital signals and produces an analog signal as an output. The conversion chain from analog to digital also provides an opportunity to explain further signal classifications, starting with continuous time vs discrete time signals.

Continuous Time vs Discrete Time

Continuous Time signals are synonymous with real analog signals. They take on defined values for every instant in a continuous interval $(a, b)$, where $a$ can be $-\infty$ and $b$ can be $\infty$, such as the signal $x(t) = \cos(\pi t)$. Discrete Time signals have values only at certain instants of time, usually a set of equally spaced instants for easier calculation. For example, the previous continuous time signal can be represented as the discrete time signal $x(t_n) = \cos(\pi t_n)$, where $t_n = nT$ for a fixed spacing $T$ and $n = 0, \pm 1, \pm 2, \ldots$

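Similarly to the periodic definition of continuous time signals, a discrete time signal is periodic when $x(n + N) = x(n)$ for all $n$, where the smallest positive value of $N$ that holds true is the fundamental period. A sinusoidal signal with frequency $f_0$ (in cycles per sample) is periodic when:

$$\cos(2\pi f_0 (N + n) + \theta) = \cos(2\pi f_0 n + \theta)$$

Since there's the trigonometric identity that any sinusoid shifted by an integer multiple of $2\pi$ is equal to itself (e.g. $\cos(\theta) = \cos(\theta + 2\pi k)$), we can reduce the discrete time sinusoid relation to having some integer $k$ such that:

$$2\pi f_0 N = 2\pi k \quad\Rightarrow\quad f_0 = \frac{k}{N}$$

In other words, a discrete time sinusoid is periodic only when its frequency is a rational number.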
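The periodicity condition can be sketched in a few lines: if the frequency in cycles per sample is a rational number in lowest terms, the fundamental period is its denominator. The function name `fundamental_period` and the example frequency of 3/8 cycles/sample are assumptions made for illustration.

```python
from fractions import Fraction
import math

def fundamental_period(f0):
    """Smallest N > 0 such that f0 * N is an integer, for rational f0 (cycles/sample)."""
    f0 = Fraction(f0)
    if f0 == 0:
        return 1  # a constant sequence repeats every sample
    return f0.denominator  # Fraction always stores the value in lowest terms

# Illustrative frequency (assumption): 3/8 cycles per sample
f0 = Fraction(3, 8)
N = fundamental_period(f0)

# Two periods of the sinusoid; the second period should repeat the first
samples = [math.cos(2 * math.pi * float(f0) * n) for n in range(2 * N)]
print(N)
```

Note that 2/8 reduces to 1/4, so its fundamental period is 4 rather than 8; the smallest $N$ is what counts.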

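The same identity (sinusoids separated by an integer multiple of $2\pi$ are identical) means that when the frequency $|\omega| > \pi$ (or $|f| > \frac{1}{2}$, with $\omega = 2\pi f$ in radians per sample), the sequence is indistinguishable from a sinusoid with frequency $|\omega| < \pi$; such a sequence is called an alias. Thus discrete time sinusoids have a finite fundamental range of a single cycle, or $2\pi$ radians; usually this is given as the range $-\pi \le \omega \le \pi$, $-\frac{1}{2} \le f \le \frac{1}{2}$, $0 \le \omega \le 2\pi$, or $0 \le f \le 1$.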

In an ADC, the process of converting a signal from continuous time to discrete time is sampling, commonly done at some regular sampling interval $T$, which is the inverse of the sampling frequency $F_s$. Continuous and discrete time are related in ideal, uniform sampling via $t = nT = n / F_s$. Thus, sampling a sinusoidal signal $x_a(t) = A\cos(2\pi F t + \theta)$, with analog frequency $F$ in Hz, gives:

$$x_a(nT) \equiv x(n) = A\cos(2\pi F n T + \theta) = A\cos\left(\frac{2\pi n F}{F_s} + \theta\right)$$

Given the expression for this discrete time sampled sinusoid, the normalized frequency is $f = F / F_s$; substituting this frequency into the aforementioned fundamental range $-\frac{1}{2} \le f \le \frac{1}{2}$ yields:

$$-\frac{F_s}{2} \le F \le \frac{F_s}{2}$$

This is an important assertion: the frequency of a continuous time analog signal must be no more than half the sampling frequency to be uniquely distinguished (and not aliased). Furthermore, the highest discernible frequency is $F_{max} = F_s / 2$.
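Ideal uniform sampling of a sinusoid can be sketched as below. The function names `sample_sinusoid` and `is_unambiguous`, and the 100 Hz tone sampled at 800 Hz, are hypothetical choices for illustration; the code simply evaluates the cosine at the instants $n / F_s$ and checks whether a frequency falls inside half the sampling frequency.

```python
import math

def sample_sinusoid(amplitude, freq_hz, phase, fs_hz, num_samples):
    """Return x(n) = A*cos(2*pi*(F/Fs)*n + theta) for n = 0..num_samples-1."""
    f_norm = freq_hz / fs_hz  # normalized frequency in cycles/sample
    return [amplitude * math.cos(2 * math.pi * f_norm * n + phase)
            for n in range(num_samples)]

def is_unambiguous(freq_hz, fs_hz):
    """True when |F| <= Fs/2, i.e. the tone lies inside the fundamental range."""
    return abs(freq_hz) <= fs_hz / 2

# Illustrative values (assumptions): 100 Hz tone sampled at 800 Hz, so F/Fs = 1/8
x = sample_sinusoid(1.0, 100.0, 0.0, 800.0, 8)
print([round(v, 3) for v in x])
print(is_unambiguous(100.0, 800.0), is_unambiguous(500.0, 800.0))
```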

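The problem with alias signals can be demonstrated with the sinusoids:

$$x_1(t) = \cos(2\pi (10) t), \qquad x_2(t) = \cos(2\pi (50) t)$$

Sampled at $F_s = 40$ Hz, the discrete time output signals are:

$$x_1(n) = \cos\left(2\pi \tfrac{10}{40} n\right) = \cos\left(\tfrac{\pi}{2} n\right), \qquad x_2(n) = \cos\left(2\pi \tfrac{50}{40} n\right) = \cos\left(\tfrac{5\pi}{2} n\right)$$

Given $\frac{5\pi}{2} = 2\pi + \frac{\pi}{2}$, and again that integer multiples of $2\pi$ can be reduced out, $x_2(n) = \cos(\frac{\pi}{2} n) = x_1(n)$, and thus $x_2$ is aliased as an indistinguishable signal from $x_1$. Therefore, to prevent the problem of aliasing, a proper sampling frequency must be chosen to satisfy the highest frequency content $F_{max}$ found in a signal:

$$F_s > 2 F_{max}$$

This is known as the Nyquist Sampling Criterion.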
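Aliasing is easy to see numerically. The sketch below assumes two example tones at 10 Hz and 50 Hz, both sampled at 40 Hz (values picked for illustration), and shows that the two sampled sequences coincide. The helper `nyquist_ok` is a hypothetical name for the check that the sampling frequency exceeds twice the highest frequency.

```python
import math

FS = 40.0  # assumed sampling frequency in Hz

def sampled_cos(freq_hz, n):
    """n-th sample of cos(2*pi*F*t) taken at t = n / FS."""
    return math.cos(2 * math.pi * freq_hz * n / FS)

x1 = [sampled_cos(10.0, n) for n in range(16)]  # 10 Hz tone, below FS/2
x2 = [sampled_cos(50.0, n) for n in range(16)]  # 50 Hz tone, above FS/2

# The largest sample-by-sample difference is only floating point noise
max_diff = max(abs(a - b) for a, b in zip(x1, x2))
print(max_diff)

def nyquist_ok(f_max_hz, fs_hz):
    """True when the sampling frequency exceeds twice the highest frequency."""
    return fs_hz > 2 * f_max_hz

print(nyquist_ok(10.0, FS), nyquist_ok(50.0, FS))
```

Once sampled, nothing in the data distinguishes the 50 Hz tone from the 10 Hz one, which is why any filtering against aliasing has to happen before the sampler.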

Continuous Value vs Discrete Value

Similarly to continuous time, a signal is of Continuous Value if it can take on any value, whether in a finite or infinite range; for instance, a certain voltage may realistically fall within the finite range of 0V to 5V, but it would be a continuous value signal if it can take the value of any real voltage within that range (e.g. 2.31256…V). Conversely, a Discrete Value signal can only take on a finite set of values within a finite range (e.g. 1V integer levels within a 10V range). The process in an ADC of converting a continuous value, discrete time signal (the output of the sampler) into a discrete value, discrete time signal is called quantization. A signal that is discrete in both time and value is considered a digital signal.
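Quantization can be sketched as rounding each sample to the nearest allowed level. The uniform, clipping quantizer below is only one possible scheme, assumed here for illustration; the function name `quantize` and the 0V to 5V range with 1V steps are likewise assumptions.

```python
def quantize(value, v_min, v_max, levels):
    """Map value to the nearest of `levels` equally spaced values in [v_min, v_max]."""
    step = (v_max - v_min) / (levels - 1)    # spacing between adjacent levels
    clipped = min(max(value, v_min), v_max)  # saturate inputs outside the range
    index = round((clipped - v_min) / step)  # nearest level index
    return v_min + index * step

# Illustrative setup (assumption): 0V to 5V range with 6 levels, i.e. 1V steps
print(quantize(2.31256, 0.0, 5.0, 6))  # nearest level to 2.31256 V
print(quantize(3.6, 0.0, 5.0, 6))      # rounds up to the next level
print(quantize(7.2, 0.0, 5.0, 6))      # out of range input saturates
```

The difference between the input and the returned level is the quantization error, which is the precision cost of moving to the digital domain.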

Linear Convolution

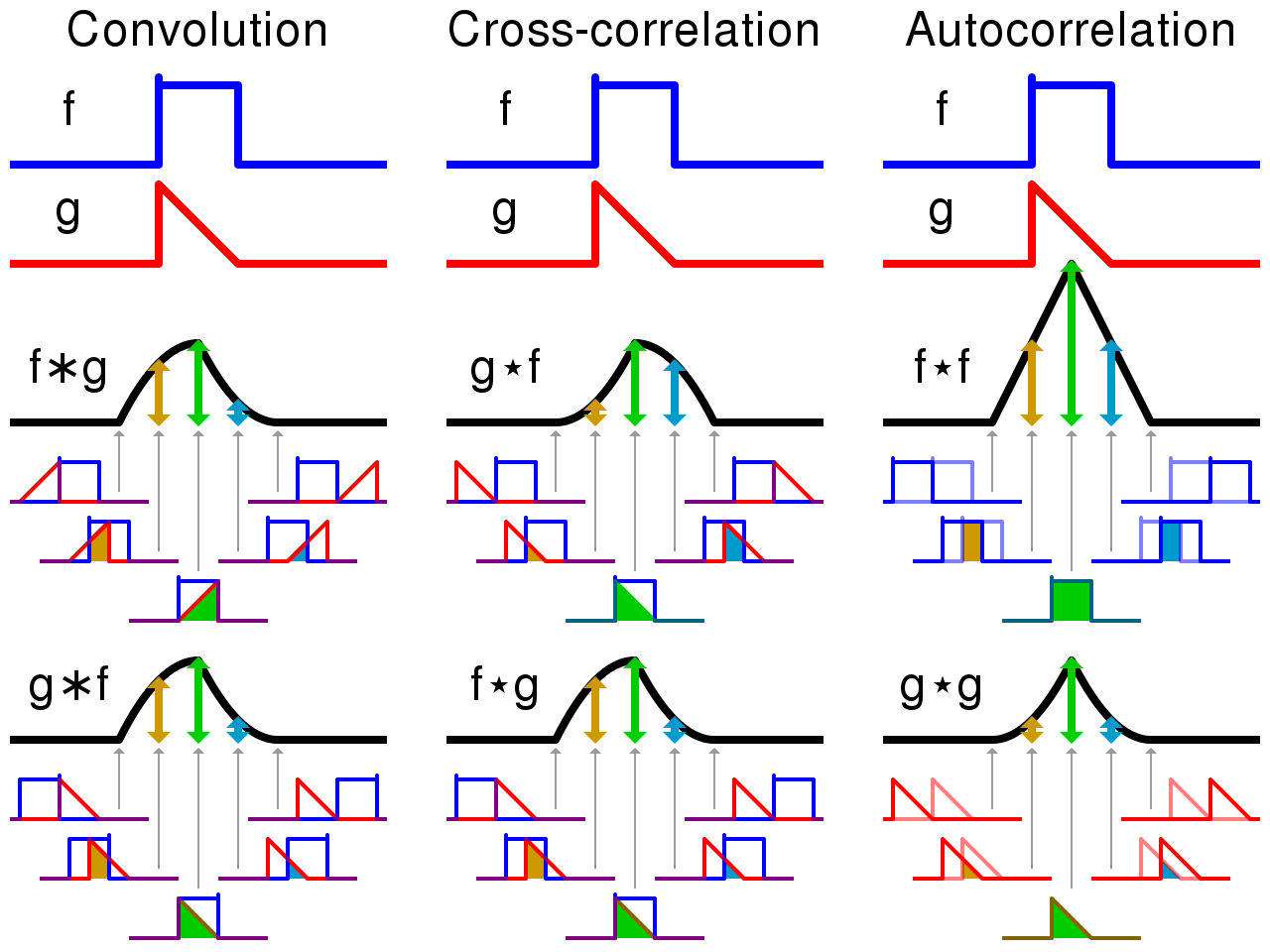

Visual comparison of convolution, cross-correlation and autocorrelation. From Wikipedia

Continuous-Time Convolution

A fundamental operation in signal processing is convolution. When a function $x(t)$ is convolved with another function $h(t)$, a third output function $y(t) = x(t) * h(t)$ expresses how the shape of one is modified by the other. Convolution is defined as the integral of the product of both functions, after one function is reversed and shifted; in continuous time this is written as:

$$y(t) = x(t) * h(t) = \int_{-\infty}^{\infty} x(\tau)\, h(t - \tau)\, d\tau$$

Convolution is also commutative, thus the above equation can also be expressed as:

$$y(t) = h(t) * x(t) = \int_{-\infty}^{\infty} h(\tau)\, x(t - \tau)\, d\tau$$

A common convolution is that of a signal, $x(t)$, with a filter, $h(t)$, to form a filtered output signal $y(t)$.

Geometrically, the convolution process can be seen as:

- Forming a time-reversed image of the filter function, $h(-\tau)$, where $\tau$ is a dummy variable.

- Sliding $h(-\tau)$ across the signal function $x(\tau)$ with a specific delay $t$, giving $h(t - \tau)$.

- At each delay position $t$, taking the integral of the product of $h(t - \tau)$ and the part of the signal $x(\tau)$ covered by the filter. This integral is also called the inner product (or dot product).

Another property of convolution is that it is a linear operation; by the superposition principle, the convolution of a weighted sum of input signals is equal to the weighted sum of the individual convolutions. For instance, the equivalence can be shown with two input signals $x_1(t)$ and $x_2(t)$ to be convolved with a filter $h(t)$, where $a$ and $b$ are arbitrary constants:

$$h(t) * \left[a\,x_1(t) + b\,x_2(t)\right] = a\left[h(t) * x_1(t)\right] + b\left[h(t) * x_2(t)\right]$$
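The commutative and linear properties can be checked numerically. Note the swap in technique: the sketch below uses the discrete-time convolution sum on short lists in place of the continuous-time integral, and the function name `convolve` and all sample values are assumptions for illustration.

```python
def convolve(x, h):
    """Full linear convolution: y[n] = sum over k of x[k] * h[n - k]."""
    y = [0.0] * (len(x) + len(h) - 1)
    for k, xk in enumerate(x):
        for m, hm in enumerate(h):
            y[k + m] += xk * hm  # x[k] * h[m] contributes to output index k + m
    return y

# Illustrative inputs (assumptions): two short signals and a 2-tap averaging filter
x1 = [1.0, 2.0, 3.0]
x2 = [0.0, -1.0, 0.5]
h = [0.5, 0.5]
a, b = 2.0, -3.0

y = convolve(x1, h)
print(y)

# Linearity: filtering the weighted sum equals the weighted sum of filtered signals
combined = convolve([a * u + b * v for u, v in zip(x1, x2)], h)
separate = [a * p + b * q for p, q in zip(convolve(x1, h), convolve(x2, h))]
print(combined, separate)
```

Swapping the arguments, `convolve(h, x1)`, gives the same output as `convolve(x1, h)`, which is the commutative property in discrete form.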

Continuous-Time Correlation

Correlation (sometimes called cross-correlation) is similar to convolution; it has the same geometrical property of a sliding inner product, however the sliding function is not time reversed but instead complex conjugated ($^*$):

$$(x \star h)(t) = \int_{-\infty}^{\infty} x^*(\tau)\, h(t + \tau)\, d\tau$$

Correlation is not commutative, however the equivalent of the above is:

$$(x \star h)(t) = \int_{-\infty}^{\infty} \overline{x(\tau - t)}\, h(\tau)\, d\tau$$

NOTE: the second equation shows another way to denote the complex conjugate operation, thus $x^*$ and $\overline{x}$ are equivalent.

Cross-correlation is commonly used for searching a long signal for a shorter, known feature.
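A feature search of this kind can be sketched with a discrete, real-valued sliding inner product. The function name `cross_correlate` and the sample data are assumptions for illustration; for real inputs the complex conjugate has no effect, so it is omitted here.

```python
def cross_correlate(signal, feature):
    """Sliding inner product of `feature` over `signal` (fully overlapping lags only)."""
    n, m = len(signal), len(feature)
    return [sum(signal[lag + k] * feature[k] for k in range(m))
            for lag in range(n - m + 1)]

# Illustrative data (assumption): the feature [1, 3, 2] is embedded at index 5
signal = [0, 0, 1, 0, 0, 1, 3, 2, 0, 0, 1, 0]
feature = [1, 3, 2]

scores = cross_correlate(signal, feature)
best_lag = max(range(len(scores)), key=scores.__getitem__)
print(scores)
print(best_lag)  # lag with the largest correlation score
```

This unnormalized score favors high-energy stretches of the signal, so practical detectors often normalize each window before comparing; that refinement is left out of the sketch.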

Transforms

Fourier Series

A time domain function can be expressed as a sum of sinusoids; for example, six sinusoids at different amplitudes but odd harmonic frequencies approximate a square wave. The resulting Fourier transform shows where the component sinusoids lie in the frequency domain and their relative amplitudes.
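The square wave approximation can be reproduced with a short sketch. It assumes the standard square wave series weights of $1/k$ on odd harmonics $k = 1, 3, \ldots, 11$ (the original figure's exact amplitudes are not given), 64 samples per period, and a direct discrete Fourier transform sum in place of the continuous transform; the names `square_approx` and `dft_magnitude` are hypothetical.

```python
import cmath
import math

N = 64                           # samples in one period (assumption)
HARMONICS = [1, 3, 5, 7, 9, 11]  # six odd harmonics

def square_approx(n):
    """Sum of odd harmonic sines with 1/k amplitudes, evaluated at sample n."""
    phase = 2 * math.pi * n / N
    return sum(math.sin(k * phase) / k for k in HARMONICS)

x = [square_approx(n) for n in range(N)]

def dft_magnitude(samples, k):
    """Single-sided amplitude of frequency bin k from a direct DFT sum."""
    count = len(samples)
    acc = sum(samples[n] * cmath.exp(-2j * math.pi * k * n / count)
              for n in range(count))
    return 2 * abs(acc) / count

# Odd bins recover the 1/k amplitudes; even bins are essentially zero
amplitudes = {k: dft_magnitude(x, k) for k in range(1, 13)}
for k, amp in amplitudes.items():
    print(k, round(amp, 6))
```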
